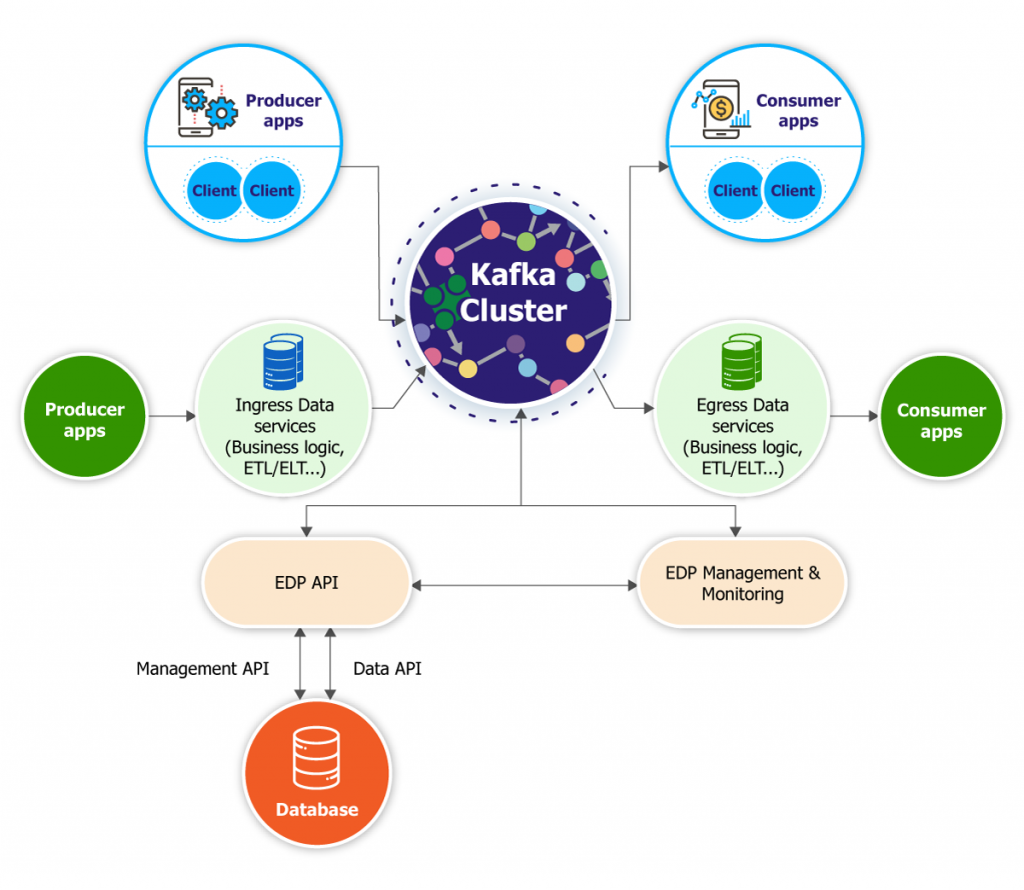

ATSC Event Distribution Platform (EDP), built on Apache Kafka, is an enterprise-ready platform for real-time and historical event-driven data pipelines and streaming applications. ATSC EDP is designed for efficient data distribution and stream processing, and it can bridge Kafka applications to other messaging applications and to Service-Oriented Architecture (SOA) environments. With Kafka technology at the core of the solution, ATSC EDP, together with our professional services, helps you secure, monitor, and manage your organization’s Kafka infrastructure.

Core capabilities

Apache Kafka is an open-source event stream-processing platform developed by the Apache Software Foundation. The goal of the project is to provide a highly scalable platform for handling real-time data feeds. ATSC provides complementary tools and services for building streaming data pipelines and applications, with enterprise-class support for Apache Kafka.

The key capabilities of Kafka are:

- Publish and subscribe to streams of records

- Store streams of records in a fault-tolerant way

- Process streams of records
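To make the capabilities above concrete, here is a minimal in-memory sketch of how a topic stores records. It is a toy model, not the Kafka client API: `ToyTopic`, `publish`, and `read` are hypothetical names, replication is omitted, and CRC32 stands in for the murmur2 hash that Kafka’s default partitioner applies to record keys. What it does show is that records are appended to partitions, that records with the same key land in the same partition and keep their order, and that consumers read by offset without removing data.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class Record:
    offset: int
    key: bytes
    value: bytes


@dataclass
class ToyTopic:
    """In-memory stand-in for a Kafka topic (not the Kafka client API)."""

    num_partitions: int
    partitions: list = field(init=False)

    def __post_init__(self) -> None:
        # Each partition is an independent, ordered, append-only log.
        self.partitions = [[] for _ in range(self.num_partitions)]

    def partition_for(self, key: bytes) -> int:
        # Same key -> same partition, so per-key ordering is preserved.
        # Assumption: CRC32 replaces Kafka's murmur2-based default partitioner.
        return zlib.crc32(key) % self.num_partitions

    def publish(self, key: bytes, value: bytes) -> tuple:
        """Append a record and return (partition, offset) of the new record."""
        partition = self.partition_for(key)
        log = self.partitions[partition]
        log.append(Record(offset=len(log), key=key, value=value))
        return partition, len(log) - 1

    def read(self, partition: int, from_offset: int, max_records: int = 100) -> list:
        # Reading never removes records, so independent subscribers can each
        # track their own offset and replay history.
        return self.partitions[partition][from_offset:from_offset + max_records]


topic = ToyTopic(num_partitions=3)
p, _ = topic.publish(b"order-42", b"created")
topic.publish(b"order-42", b"paid")
print([r.value for r in topic.read(p, 0)])  # [b'created', b'paid']
```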

The key components of the Kafka open-source project are the Kafka brokers and the Kafka Java client APIs.

Kafka Brokers

Kafka brokers form the messaging, data persistence, and storage tier of Kafka.

Kafka Java Client APIs

- Producer API is a Java client that allows an application to publish a stream of records to one or more Kafka topics.

- Consumer API is a Java client that allows an application to subscribe to one or more topics and process the stream of records produced to them.

- Streams API allows applications to act as a stream processor, consuming an input stream from one or more topics and producing an output stream to one or more output topics, effectively transforming the input streams to output streams.

- Connect API is a component that you can use to stream data between Kafka and other data systems in a scalable and reliable way.
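Consumers that share a group ID divide a topic’s partitions among themselves, so each partition is read by exactly one member of the group. The sketch below approximates the range strategy used by Kafka’s `RangeAssignor` for a single topic; the function name `range_assign` is hypothetical, and the code is an illustration rather than the client’s implementation.

```python
def range_assign(members: list[str], num_partitions: int) -> dict[str, list[int]]:
    """Split partitions 0..num_partitions-1 of one topic across group members."""
    if not members:
        return {}
    ordered = sorted(members)  # the range strategy works on a sorted member list
    per_member, extra = divmod(num_partitions, len(ordered))
    assignment = {}
    for i, member in enumerate(ordered):
        # The first `extra` members each take one additional partition.
        start = i * per_member + min(i, extra)
        count = per_member + (1 if i < extra else 0)
        assignment[member] = list(range(start, start + count))
    return assignment


print(range_assign(["c2", "c1", "c3"], 7))
# {'c1': [0, 1, 2], 'c2': [3, 4], 'c3': [5, 6]}
```

With seven partitions and three members, the first member receives the extra partition. If a group has more members than partitions, the surplus members stay idle until a rebalance gives them work.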

Advanced features

ATSC EDP is built on Apache Kafka, adds advanced features, and is backed by ATSC professional support services. Beyond the features available in community Apache Kafka, our advanced offerings complement the Kafka infrastructure system-wide in the following areas:

Management & Monitoring

EDP Management & Monitoring is a GUI-based system for managing and monitoring a Kafka environment. It lets you manage clusters and create, edit, and manage connections to other systems. With open-source Kafka, you must manage all deployment parameters manually, with or without the help of a ZooKeeper console; with EDP, every administration task is available in a graphical interface in your web browser. From system deployment to real-time dashboards, we provide built-in components as well as customization on request.

Management & Monitoring can also define thresholds on the latency and statistics of data streams, and it can deliver alerts via email or make them available for querying from a centralized system. It also lets you monitor each message from producer to consumer, verifying that every message is delivered and measuring how long delivery takes (end-to-end monitoring).
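End-to-end monitoring comes down to matching each message sent by a producer with its arrival at a consumer. The sketch below assumes a hypothetical input format (two dictionaries mapping a message ID to a timestamp in milliseconds) and reports missing messages and the 99th-percentile latency, computed with the nearest-rank method, against a threshold. It is not the EDP implementation; the function names and report fields are illustrative.

```python
import math
from dataclasses import dataclass


@dataclass
class LatencyReport:
    p99_ms: float
    missing: set
    breached: bool


def nearest_rank_percentile(values: list, pct: float) -> float:
    # Nearest-rank: the smallest sample such that at least pct% of samples are <= it.
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def end_to_end_report(sent: dict, received: dict, p99_threshold_ms: float) -> LatencyReport:
    """`sent` and `received` map a message ID to a timestamp in milliseconds."""
    missing = set(sent) - set(received)  # produced but never consumed
    latencies = [received[m] - sent[m] for m in sent if m in received]
    p99 = nearest_rank_percentile(latencies, 99) if latencies else float("nan")
    breached = bool(missing) or (bool(latencies) and p99 > p99_threshold_ms)
    return LatencyReport(p99_ms=p99, missing=missing, breached=breached)


report = end_to_end_report({"a": 0, "b": 10, "c": 20}, {"a": 5, "b": 40}, 50)
print(report)  # LatencyReport(p99_ms=30, missing={'c'}, breached=True)
```

The send time could come from the record timestamp, which by default is set by the producer (`CreateTime`). In that case producer and consumer hosts need synchronized clocks, otherwise clock skew shows up as latency.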

Key capabilities include:

- System management, including users, user groups, and privileges

- Cluster management, including resources, connections with other systems, cluster membership, and broker configurations (leader-follower), log…

- Consumer and producer management, including members and published/subscribed topics

- Topic management, including topic configuration, partitions, logs, client access control…

- Real-time dashboards for metrics monitoring and management

- Navigation, browsing, and search through data in the Kafka cluster
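Topic management in a GUI typically involves validating settings before they reach the brokers. The helper below is a hypothetical example of such checks. `cleanup.policy`, `retention.ms`, and `min.insync.replicas` are real Kafka topic configurations, with the defaults shown in the code, but the rules themselves are illustrative rather than EDP’s own.

```python
VALID_CLEANUP_POLICIES = {"delete", "compact"}


def validate_topic_config(replication_factor: int, config: dict) -> list[str]:
    """Return human-readable problems; an empty list means the config looks sane."""
    problems = []

    # cleanup.policy may list both policies, e.g. "compact,delete" (default: delete).
    policies = {p.strip() for p in config.get("cleanup.policy", "delete").split(",")}
    unknown = policies - VALID_CLEANUP_POLICIES
    if unknown:
        problems.append(f"unknown cleanup.policy value(s): {sorted(unknown)}")

    # retention.ms: -1 means unlimited; the default is 604800000 (7 days).
    if int(config.get("retention.ms", 604800000)) < -1:
        problems.append("retention.ms must be -1 (unlimited) or >= 0")

    # min.insync.replicas (default 1) is compared with the replication factor.
    min_isr = int(config.get("min.insync.replicas", 1))
    if min_isr > replication_factor:
        problems.append("min.insync.replicas exceeds the replication factor; "
                        "acks=all writes will always fail")
    elif min_isr == replication_factor and replication_factor > 1:
        problems.append("min.insync.replicas equals the replication factor; "
                        "one replica outage blocks acks=all writes")
    return problems


print(validate_topic_config(3, {"min.insync.replicas": "2", "cleanup.policy": "compact,delete"}))  # []
```

The `min.insync.replicas` check matters because producers using `acks=all` have their writes rejected when fewer in-sync replicas than this value are available.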

Ingress/Egress Data Services

Microservices have changed the software development landscape. They help make developers more agile by reducing dependencies such as shared database tiers. However, distributed applications still need some form of integration to share data.

- One popular integration option, the synchronous method, uses application programming interfaces (APIs) to share data between different users and applications. API-based services, called either directly or indirectly (via a message broker or service bus), are the traditional approach adopted by most systems with a monolithic architecture.

- A second integration option, the asynchronous method, involves replicating data in an intermediate store. This is where Apache Kafka comes in: it streams data from other systems to populate the data store, so the data can be shared between multiple applications. These distributed integrations consist of multiple lightweight, pattern-based integrations that can be continuously deployed where required and are not constrained by centralized ESB-type deployments. In this way, Apache Kafka serves as an alternative to a traditional enterprise messaging system.

Part of ATSC EDP’s agile integration approach is the freedom to use either synchronous or asynchronous integration, depending on the needs of each application. Apache Kafka is a strong option for asynchronous, event-driven integration that augments synchronous integration and APIs, further supporting microservices and enabling agile integration.

- Native Kafka clients are available for Java, C, C++, and Python, making it fast and easy to produce and consume messages through Kafka. This integration approach requires adding a Kafka client to each producer and consumer application.

- EDP Ingress/Egress Data Services address cases where it is not possible to write and maintain an application that uses the native clients (for example, legacy systems developed or managed by different parties).

EDP Ingress/Egress Data Services make it easy to work with Kafka from any language by providing a service interface to Kafka clusters. The data services support all the core functionality (sending messages to Kafka and reading messages from it) as well as more complex business requirements (data manipulation logic, ETL, ELT…). You get the full benefit of high-quality, officially maintained streaming-ETL microservices that transform data within the pipeline and cleanly transport it to another system.
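As an illustration of the data manipulation an ingress service might perform, the following sketch validates a JSON payload from a legacy system and turns it into a key/value pair ready to be produced to Kafka. The input schema (`id`, `amount`, `currency`), the output field names, and the function name are hypothetical; a real data service would also handle its HTTP or file interface and the actual produce call.

```python
import json
from decimal import Decimal

REQUIRED_FIELDS = ("id", "amount", "currency")  # hypothetical legacy schema


def to_kafka_record(raw: bytes) -> tuple[bytes, bytes]:
    """Validate one legacy JSON payload and transform it into a (key, value) pair."""
    doc = json.loads(raw)
    missing = [f for f in REQUIRED_FIELDS if f not in doc]
    if missing:
        # A real service might route rejects to a dead-letter topic instead.
        raise ValueError(f"missing fields: {missing}")

    event = {
        "order_id": str(doc["id"]),
        # Amounts become integer minor units (e.g. "12.34" -> 1234);
        # Decimal avoids binary floating-point rounding surprises.
        "amount_minor": int((Decimal(str(doc["amount"])) * 100).to_integral_value()),
        "currency": str(doc["currency"]).upper(),
    }
    # Keying by order ID keeps all events of one order in the same partition.
    key = event["order_id"].encode()
    value = json.dumps(event, sort_keys=True).encode()
    return key, value


print(to_kafka_record(b'{"id": 42, "amount": "12.34", "currency": "usd"}'))
# (b'42', b'{"amount_minor": 1234, "currency": "USD", "order_id": "42"}')
```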

Data persistence in a database

Although Kafka lets you configure message retention, it is primarily designed for low-latency message delivery. Kafka lacks many of the features usually associated with database management systems (DBMSs) or filesystems, such as ad hoc querying and indexing. For long-term storage, some form of long-term ingestion, such as a NoSQL database on a distributed filesystem (CFS, HDFS), is therefore recommended.

To provide long-term storage, ATSC EDP bundles the capability to store EDP data in a database. After messages are received and written to topics, users can choose to persist them in a database for further data exploration. The EDP API does this by reading (consuming) data from the relevant partitions and writing it to the database. The EDP API uses Kafka Connect for this kind of integration, and it relies on specific database connectors and change data capture (CDC) tools for more advanced requirements.
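Because a consumer may see the same records again after a failure (for example, if it crashes after writing to the database but before committing its offsets), a database sink should make its writes idempotent. The sketch below uses Python’s built-in `sqlite3` as a stand-in for Cassandra and keys each row by topic, partition, and offset, so a redelivered batch overwrites rows instead of duplicating them. The table and function names are hypothetical. In Cassandra, an `INSERT` with an existing primary key is likewise an upsert, so the same keying idea carries over.

```python
import sqlite3


def open_sink(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    # (topic, partition, offset) uniquely identifies a Kafka record,
    # so it makes a natural primary key for idempotent writes.
    conn.execute(
        """CREATE TABLE IF NOT EXISTS kafka_events (
               topic        TEXT    NOT NULL,
               partition_id INTEGER NOT NULL,
               msg_offset   INTEGER NOT NULL,
               msg_key      BLOB,
               msg_value    BLOB,
               PRIMARY KEY (topic, partition_id, msg_offset))"""
    )
    return conn


def write_batch(conn: sqlite3.Connection, topic: str, partition: int, records: list) -> None:
    """Persist (offset, key, value) tuples consumed from one partition."""
    with conn:  # one transaction per batch; commit consumer offsets only after this returns
        conn.executemany(
            "INSERT OR REPLACE INTO kafka_events VALUES (?, ?, ?, ?, ?)",
            [(topic, partition, off, key, value) for off, key, value in records],
        )


conn = open_sink()
write_batch(conn, "orders", 0, [(0, b"k1", b"created"), (1, b"k1", b"paid")])
write_batch(conn, "orders", 0, [(1, b"k1", b"paid")])  # redelivered after a restart
print(conn.execute("SELECT COUNT(*) FROM kafka_events").fetchone()[0])  # 2
```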

The recommended database is Apache Cassandra or its commercial version (DataStax Enterprise), because of their distributed, scalable architecture. Because Kafka event data differs from application to application and from project to project, careful data analysis and data modelling are crucial steps when considering direct database integration.

Security

ATSC EDP empowers enterprises with advanced security capabilities that provide fine-grained user and access controls to keep application data protected and improve compliance, lowering business risk. EDP enforces the principle of least privilege for IT systems: access to designated systems is limited to personnel who require it for their job function.

An event streaming platform is the backbone and source of truth for data systems across the enterprise. Protecting this platform is critical for data security and is often required by governing bodies. Enterprises have very strict security requirements, which is why ATSC EDP provides robust, easy-to-use security functionality, including:

- Authentication

Authentication verifies the identity of users and applications connecting to EDP. There are three main areas of focus related to authentication: brokers, ZooKeeper (Apache ZooKeeper™ servers), and HTTP-based services.

- Kafka brokers authenticate connections from clients (producers and consumers) and from other brokers using SASL, with support for SASL/GSSAPI (Kerberos), SASL/PLAIN, SASL/SCRAM-SHA-256, SASL/SCRAM-SHA-512, and SASL/OAUTHBEARER.

- ZooKeeper supports mTLS authentication as well as SASL authentication with the DIGEST-MD5 and GSSAPI mechanisms.

- All EDP HTTP services support HTTP authentication, mTLS, and LDAP-based authentication.
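On the client side, authentication is largely a matter of configuration. The sketch below builds the properties used by librdkafka-based clients (such as the C/C++ and Python client libraries) for SASL authentication over TLS. The property names are librdkafka’s; the helper name, host name, credentials, and file path are hypothetical placeholders. GSSAPI and OAUTHBEARER are left out because they need additional settings (a Kerberos principal and keytab, or token configuration).

```python
# Mechanisms that need only a username and password (GSSAPI/OAUTHBEARER need more settings).
PASSWORD_MECHANISMS = {"PLAIN", "SCRAM-SHA-256", "SCRAM-SHA-512"}


def sasl_ssl_client_config(bootstrap: str, mechanism: str, username: str,
                           password: str, ca_file: str) -> dict:
    """Build librdkafka-style client properties for SASL authentication over TLS."""
    if mechanism not in PASSWORD_MECHANISMS:
        raise ValueError(f"unsupported mechanism for this helper: {mechanism}")
    return {
        "bootstrap.servers": bootstrap,
        "security.protocol": "SASL_SSL",  # authenticate with SASL inside a TLS channel
        "sasl.mechanisms": mechanism,
        "sasl.username": username,
        "sasl.password": password,        # load from a secret store, not source code
        "ssl.ca.location": ca_file,       # CA certificate used to verify the brokers
    }


# Hypothetical placeholder values.
conf = sasl_ssl_client_config("broker1.example.com:9093", "SCRAM-SHA-512",
                              "orders-app", "change-me", "/etc/edp/ca.pem")
```

The resulting dictionary could be passed to a client constructor such as `confluent_kafka.Producer(conf)`. Using `SASL_SSL` rather than `SASL_PLAINTEXT` matters especially for `PLAIN`, which otherwise sends the password unencrypted.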

- Authorization

Authorization lets administrators define the actions that clients are permitted to take once connected. Kafka provides ACL-based authorization that allows or denies each client’s access to a topic’s content. Kafka ACLs are stored in ZooKeeper and provide a granular way to manage access within a single Kafka cluster. However, ACLs can be difficult to manage in a large deployment (many users, groups, or applications) because they have no concept of roles.

ATSC EDP provides Role-Based Access Control (RBAC), which addresses these shortcomings. RBAC is built around predefined roles and the privileges associated with those roles. A role is a collection of permissions; binding a role to a user or group on a resource allows that principal to exercise the role’s privileges on that resource. Using RBAC, you can manage who has access to specific resources and which actions a user can perform on each resource.
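The role-binding idea can be sketched in a few lines. In this hypothetical model, each role is a named set of operations, a binding grants a role to a principal (user or group) on a resource or resource prefix, and anything not granted by some binding is denied. The role names, the `*` prefix syntax, and the functions are illustrative assumptions, not the EDP RBAC API.

```python
from dataclasses import dataclass

# Hypothetical role catalogue: role name -> operations it permits.
ROLES = {
    "TopicReader": {"READ", "DESCRIBE"},
    "TopicWriter": {"WRITE", "DESCRIBE"},
    "TopicAdmin": {"READ", "WRITE", "DESCRIBE", "ALTER", "DELETE"},
}


@dataclass(frozen=True)
class RoleBinding:
    principal: str  # e.g. "User:alice" or "Group:payments"
    role: str       # key into ROLES
    resource: str   # e.g. "Topic:orders"; a trailing "*" matches a prefix


def resource_matches(pattern: str, resource: str) -> bool:
    if pattern.endswith("*"):
        return resource.startswith(pattern[:-1])
    return pattern == resource


def is_authorized(bindings: list, principals: set, operation: str, resource: str) -> bool:
    """Allow only if some binding for one of the caller's principals grants the operation."""
    return any(
        b.principal in principals
        and resource_matches(b.resource, resource)
        and operation in ROLES.get(b.role, set())
        for b in bindings
    )  # no matching binding -> denied by default


bindings = [
    RoleBinding("Group:payments", "TopicReader", "Topic:payments-*"),
    RoleBinding("User:alice", "TopicAdmin", "Topic:orders"),
]
alice = {"User:alice", "Group:payments"}  # a user plus the groups she belongs to
print(is_authorized(bindings, alice, "READ", "Topic:payments-eu"))   # True
print(is_authorized(bindings, alice, "WRITE", "Topic:payments-eu"))  # False
```

One binding of `TopicReader` to a group on a prefix covers every matching topic and every member of the group, which is exactly the bookkeeping that per-topic, per-client ACLs make tedious.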

- Encryption

Encrypting data in transit between brokers and applications (clients) mitigates the risk of data being intercepted across the network.

Authentication and encryption are configured in Kafka per listener. By default, Apache Kafka® communicates in PLAINTEXT, which means that all data is sent in the clear. To encrypt communication, configure all EDP components in your deployment to use SSL (TLS) encryption, including configuring every broker in the Kafka cluster to accept secure connections from clients.
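Since security is configured per listener, a simple compliance check is to flag broker listeners that do not encrypt traffic. The helper below reads two real broker settings, `listeners` and `listener.security.protocol.map`, from a dictionary of parsed `server.properties` values and reports every listener whose protocol is not `SSL` or `SASL_SSL`. The function names are hypothetical, and a complete audit would also cover inter-broker and ZooKeeper connections.

```python
# Kafka's default listener.security.protocol.map maps each standard
# protocol name to itself, so a listener named SSL uses the SSL protocol.
DEFAULT_PROTOCOL_MAP = {p: p for p in ("PLAINTEXT", "SSL", "SASL_PLAINTEXT", "SASL_SSL")}
ENCRYPTED_PROTOCOLS = {"SSL", "SASL_SSL"}


def parse_protocol_map(value: str) -> dict:
    """Parse "NAME:PROTOCOL,NAME:PROTOCOL" into a dict."""
    pairs = (item.split(":", 1) for item in value.split(",") if item.strip())
    return {name.strip(): protocol.strip() for name, protocol in pairs}


def unencrypted_listeners(props: dict) -> list:
    """Return listeners from broker properties whose traffic is not encrypted."""
    protocol_map = DEFAULT_PROTOCOL_MAP
    if "listener.security.protocol.map" in props:
        # An explicit setting replaces the default map entirely.
        protocol_map = parse_protocol_map(props["listener.security.protocol.map"])
    flagged = []
    for listener in props.get("listeners", "").split(","):
        listener = listener.strip()
        if not listener:
            continue
        name = listener.split("://", 1)[0]  # e.g. "SSL" from "SSL://:9093"
        protocol = protocol_map.get(name, "UNKNOWN")
        if protocol not in ENCRYPTED_PROTOCOLS:
            flagged.append(f"{listener} ({protocol})")
    return flagged


print(unencrypted_listeners({"listeners": "PLAINTEXT://:9092,SSL://:9093"}))
# ['PLAINTEXT://:9092 (PLAINTEXT)']
```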

Enabling SSL can affect performance because of encryption overhead, so system capacity and application performance requirements should be assessed beforehand. For deployments with specific security-compliance requirements, ATSC EDP also supports encrypting secret values in the EDP system, including passwords, keys, and hostnames.

- Monitoring & Audit logging

ATSC EDP’s centralized logging functionality gives administrators the ability to understand “who looked at what, when” and “who changed what, when”, which is crucial for meeting many security compliance standards. This is achieved by implementing an independent data store (normally a database) for all system logs. The behavior of every component and client is regularly logged and pushed to this data store through the EDP management API to meet your audit requirements.
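The audit questions above map naturally onto a small append-only record of principal, action, resource, and time. The sketch below keeps events in memory as a stand-in for the independent data store; the class names and action names are hypothetical, and a real deployment would write to a database and protect the records from modification.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditEvent:
    when: datetime
    who: str       # authenticated principal, e.g. "User:alice"
    action: str    # hypothetical action names, e.g. "READ", "ALTER_CONFIG"
    resource: str  # e.g. "Topic:orders"


class AuditLog:
    """Append-only, in-memory stand-in for an independent audit data store."""

    def __init__(self) -> None:
        self._events: list = []

    def record(self, who: str, action: str, resource: str,
               when: Optional[datetime] = None) -> None:
        # Default timestamps are taken in UTC so events from different hosts compare correctly.
        self._events.append(AuditEvent(when or datetime.now(timezone.utc), who, action, resource))

    def query(self, resource: str, actions: set, since: datetime) -> list:
        """Answer "who did <action> on <resource>, and when" since a point in time."""
        return [(e.who, e.action, e.when) for e in self._events
                if e.resource == resource and e.action in actions and e.when >= since]


log = AuditLog()
start = datetime(2024, 1, 1, tzinfo=timezone.utc)
log.record("User:alice", "READ", "Topic:orders", when=start)
log.record("User:bob", "ALTER_CONFIG", "Topic:orders", when=datetime(2024, 1, 2, tzinfo=timezone.utc))
print([who for who, _, _ in log.query("Topic:orders", {"ALTER_CONFIG"}, start)])  # ['User:bob']
```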

Monitoring functionality is integrated across all components out of the box. EDP M&M collects metrics from Kafka brokers, produces the values to a Kafka topic, and provides multi-cluster, dashboard-style views in the admin GUI.

EDP M&M also provides alerting, which is key to keeping operators aware of the EDP cluster’s status, through user-defined triggers and actions. Triggers can be defined as thresholds on the latency and statistics of data streams and system components. Alerts can be delivered via email or to another system that responds to them.
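A trigger is essentially a metric name, a comparison, and a threshold, and an action is whatever should happen when the trigger fires. The sketch below evaluates such triggers and uses consumer lag (log-end offset minus the group’s committed offset, summed over partitions) as an example metric. The metric names, trigger names, and action callback are hypothetical; an email or webhook sender would be plugged in as an action.

```python
import operator
from dataclasses import dataclass

COMPARATORS = {">": operator.gt, ">=": operator.ge, "<": operator.lt, "<=": operator.le}


@dataclass
class Trigger:
    name: str
    metric: str       # hypothetical metric name, e.g. "orders.consumer_lag"
    op: str           # one of the keys in COMPARATORS
    threshold: float


def consumer_lag(end_offsets: dict, committed: dict) -> int:
    """Log-end offset minus committed offset, summed over a topic's partitions.

    Assumption: a partition with no committed offset is counted from offset 0.
    """
    return sum(end - committed.get(p, 0) for p, end in end_offsets.items())


def evaluate(triggers: list, metrics: dict, actions: list) -> list:
    """Run every action for each trigger whose condition holds; return fired trigger names."""
    fired = []
    for t in triggers:
        value = metrics.get(t.metric)
        if value is not None and COMPARATORS[t.op](value, t.threshold):
            fired.append(t.name)
            for action in actions:  # e.g. send an email or call another system
                action(t, value)
    return fired


notifications = []
lag = consumer_lag({0: 120, 1: 80}, {0: 100, 1: 80})  # 20
triggers = [Trigger("orders-lag", "orders.consumer_lag", ">", 10),
            Trigger("orders-latency", "orders.p99_ms", ">", 500)]
print(evaluate(triggers, {"orders.consumer_lag": lag, "orders.p99_ms": 120},
               [lambda t, v: notifications.append((t.name, v))]))  # ['orders-lag']
```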

Supported Kafka Clients

- C/C++ Client Library

- Python Client Library

- Go Client Library

- .NET Client Library