Big Data

Big data solutions are complex and relatively new to most developers. Despite that, many enterprises dive deep into big data software development, because it is hard to overestimate its value for the business. We at ATSC hope you can find here a comprehensive explanation of big data application architecture and a resource breakdown for a sample project of this kind, based on our best-in-class software and technologies approach.

Highly valuable information is hidden in huge volumes of data, and more and more businesses are aware of its importance. Systems were conceived and created to save information so that we can analyze the data and make decisions based on it. Business intelligence and data warehousing have proved the importance of data analysis in decision making based on structured data. Traditional databases were conceived to store structured data, queried using SQL. Data warehousing and business intelligence were the pioneers of data exploitation and data mining. Data consulting services have provided tools to improve decision making.

In the meantime, the cost of server hosting has decreased significantly, so more data can be saved. Data has become more and more complex as it has grown exponentially rather than linearly. Today, structured data represents only 15% of the data produced every day; the remaining 85% is unstructured, like music, movies, etc. A study has found that more data is produced in two days than from the dawn of humanity until 2003. Since SQL can’t query unstructured data, other ways to query this data were developed, and NoSQL databases emerged.

FROM STRUCTURED TO UNSTRUCTURED DATA

Even if a very large data warehouse can be conceived, it is limited to structured and semi-structured data. BI consists of a set of tools and techniques for collecting, cleaning and enriching structured or semi-structured data and storing it in different forms of SQL-based, multidimensional databases. The data is therefore managed in standardized formats to facilitate access to information and speed up processing. Business intelligence aims to produce KPIs for analyzing past and present data in order to predict the future by extrapolation and to act on these forecasts.

Building a data warehouse from scratch is no easy task. A large project such as this requires more than a year of setup, configuration, and optimization before it is ready for business intelligence purposes.

But remember, your data warehouse is only one aspect of your entire data architecture:

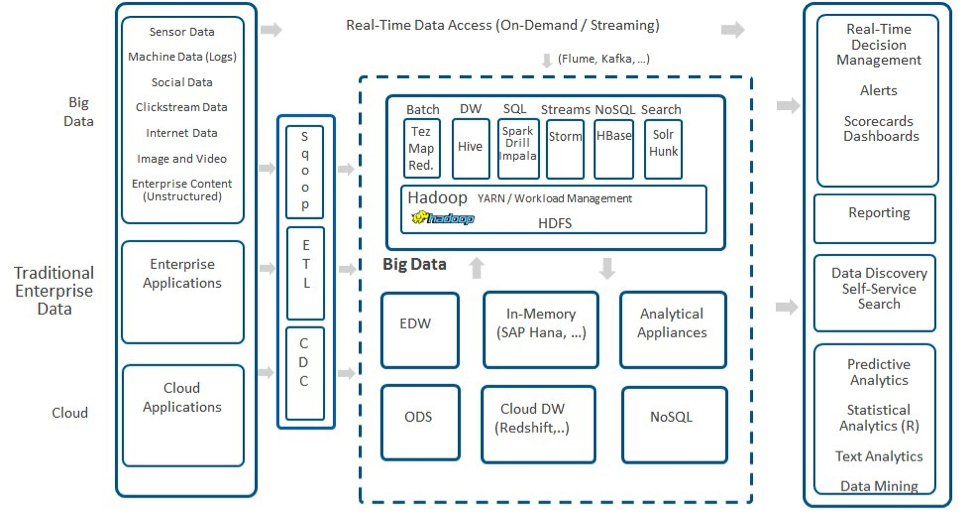

Typical Big Data Architecture

It is no surprise, then, that according to Gartner over 60 percent of all big data projects fail to get past the experimentation stage and are subsequently abandoned.

Amazon conducted a study on the costs associated with building and maintaining data warehouses, finding that expenses can run between $19,000 and $25,000 per terabyte annually. That means a data warehouse containing 40 TB of information (a modest repository for many large enterprises) requires a yearly budget of about $880,000 (close to $1M), assuming each terabyte requires $22,000 in upkeep.
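The arithmetic behind that figure is easy to check. Here is a minimal sketch using the per-terabyte rates quoted above; the function name `annual_warehouse_cost` is ours, chosen only for illustration.

```python
def annual_warehouse_cost(terabytes: int, cost_per_tb: int) -> int:
    """Yearly upkeep in USD for a warehouse of the given size."""
    return terabytes * cost_per_tb

size_tb = 40
low = annual_warehouse_cost(size_tb, 19_000)    # lower bound of the quoted range
mid = annual_warehouse_cost(size_tb, 22_000)    # the rate assumed in the text
high = annual_warehouse_cost(size_tb, 25_000)   # upper bound of the quoted range

print(f"{size_tb} TB: ${low:,} to ${high:,} per year (${mid:,} at $22,000/TB)")
```

For the same 40 TB, the quoted range therefore spans $760,000 to $1,000,000 per year.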

What’s behind these costs, and should they worry a business? While storage grows more affordable every year, the engineering (setup and maintenance) of data warehouses lies at the heart of the issue. IT leaders, architects and others in charge of developing data warehouses often encounter challenges that add up to real and opportunity costs that affect the bottom line.

REDUCING COSTS THROUGH BIG DATA INTELLIGENCE PLATFORMS

Running BI tools directly on Hadoop and other Big Data systems entails using Big Data intelligence platforms to act as mediators between BI applications and enterprise data lakes. The technology uses standard interfaces such as ODBC and JDBC that connect BI applications to various Hadoop ecosystems.

Big Data intelligence platforms provide data analysts with virtual cubes that represent the data within Big Data ecosystems. Analysts may use SQL, XMLA or MDX to create dimensions, measures and relationships as well as collaborate with other modelers to share, adjust and update models. For instance, an analyst using Excel could apply MDX queries, while another working in Tableau can employ SQL.

At their core, Hadoop intelligence platforms eliminate the need to extract, transform and load data to perform BI workloads through adaptive caching. This technology provides the responsiveness BI professionals expect when applying queries without introducing the need to create off-cluster indexes, data marts or multidimensional OLAP cubes. Instead, adaptive caching analyzes end-user query patterns and learns which aggregates users would want to pull.

The aggregates sit on the Hadoop cluster. In addition, the adaptive caching system handles the entire aggregate table lifecycle, applying updates and decommissioning as required. Ultimately, it eliminates the need for IT to migrate data to off-cluster environments.
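To make the idea of adaptive caching more concrete, here is a toy, single-process sketch. It is not how any particular platform implements it: the class `AdaptiveAggregateCache`, its `threshold` parameter and the sample rows are all hypothetical. It only shows the principle of watching query patterns, materializing an aggregate once a pattern repeats, and refreshing that aggregate when new data arrives.

```python
from collections import Counter, defaultdict

class AdaptiveAggregateCache:
    """Toy model: build an aggregate table once a grouping is queried often enough."""

    def __init__(self, rows, threshold=2):
        self.rows = list(rows)          # raw fact rows (list of dicts)
        self.threshold = threshold      # scans needed before materializing
        self.query_counts = Counter()   # observed query patterns
        self.aggregates = {}            # (dimension, measure) -> precomputed sums

    def _scan(self, dimension, measure):
        # Full pass over the raw rows: the expensive path.
        totals = defaultdict(float)
        for row in self.rows:
            totals[row[dimension]] += row[measure]
        return dict(totals)

    def sum_by(self, dimension, measure):
        key = (dimension, measure)
        if key in self.aggregates:
            return self.aggregates[key], "cache"
        self.query_counts[key] += 1
        result = self._scan(dimension, measure)
        if self.query_counts[key] >= self.threshold:
            self.aggregates[key] = result   # materialize for later queries
        return result, "scan"

    def refresh(self, new_rows):
        """Apply new data and rebuild the aggregates already materialized."""
        self.rows.extend(new_rows)
        for dimension, measure in list(self.aggregates):
            self.aggregates[(dimension, measure)] = self._scan(dimension, measure)

sales = [
    {"region": "north", "amount": 120.0},
    {"region": "south", "amount": 80.0},
    {"region": "north", "amount": 30.0},
]
cache = AdaptiveAggregateCache(sales, threshold=2)
first = cache.sum_by("region", "amount")    # answered by scanning the raw rows
second = cache.sum_by("region", "amount")   # scanned again, then materialized
third = cache.sum_by("region", "amount")    # answered from the aggregate
cache.refresh([{"region": "south", "amount": 20.0}])
fourth = cache.sum_by("region", "amount")   # aggregate includes the new row
```

In a real deployment the aggregates would live on the cluster next to the data; the in-memory dictionary here is only a stand-in.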

But what about IT control? Hadoop intelligence platforms connect directly to secure Hadoop services, providing support for delegated authorization, Active Directory, Kerberos, SASL, TLS and other security protocols. In addition, administrators can monitor cube access and queries to ensure they’re effectively enforcing role-based access controls.

THE TRANSITION

With the technology available today, investing in proprietary, cost-intensive big data warehouses is no longer the only option. Neither is incurring the time, maintenance, and labor costs associated with constantly moving data into warehouses built for business intelligence queries.

Big data was born to handle this large amount of data, and especially the huge amount of unstructured data. It has become very popular, and in recent years mastering it has become more relevant than ever.

At ATSC, traditional data consulting services have evolved and have integrated big data into their offering. They have become big data consulting services. Let’s dig a little further to better understand what big data is, why it has become the trend, what a big data solution is, and especially what implementing big data solutions could cost.

BIG DATA TRENDING

Technology has evolved so fast that we now talk about petabytes when measuring stored data, whereas a few decades ago a megabyte seemed like a big revolution. Today, thanks to the IoT (Internet of Things), there are more and more interconnected objects; the estimate is over 10 billion interconnected objects to date.

Back in 2014, McKinsey reported that companies obtain an ROI of 131% compared to their competitors when their managers and executives have access to adequate analysis of big data. This difference is likely to be greater today, and it increases every day as we become more familiar with big data, better trained in it, and more experienced in implementing big data solutions.

Data comes from diverse sources that produce huge quantities of it: social media (personal information, link sharing, blogging, etc.) and applications (geolocation, application logging, etc.).

Several applications of big data have been developed and implemented, and AI has become an ally of big data.

APPLICATION FIELDS

The data recorded relates to several domains, so businesses of all kinds can find the information they want. Currently, the field of use of big data solutions is quite large. These fields include:

- Telecom (known as big data solutions for telecom)

- Media and Entertainment

- Finance

- Government

- Retailing

- Health

- Energy

Big data is used by companies and businesses to:

- Help in decision making: real-time, accurate data supports informed decisions based on facts, not on gut feeling

- Help with customer segmentation

- Offer a fully customized service to customers

- Manage stock and predict product needs, including the ideal products to keep in stock

- Help in understanding customers’ needs, behaviors and habits

- Help to better manage customer relationships, offering more customer-centric products

- Help in fraud prediction

- Help improve business processes

- Help identify and remove performance bottlenecks proactively, optimize resource utilization, and reduce costs.

- Reduce billing errors, verify eligibility, detect and prevent fraudulent claims and speed-up revenue recovery.

BIG DATA USAGE

Big data finds its strength in analytics and offers a very powerful tool for decision making. Now that we better understand what a big data solution is, we may wonder how, behind the theory, big data really operates in the real world and what its real-world uses are.

Media (video, audio, etc.) today represents an important part of the data stored to date. It is not surprising that one real-world use is in the marketing of video content. Netflix is a good example of the use of big data solutions. It has millions of customers to this day.

Customer management: when offering content to its customers, it records their behavior in order to offer them personalized programs and content:

- What they watch

- The time at which they watched the videos

- How the video was watched (continuously or with interruptions, completely or partially, whether it was looped and how many times it was seen, etc.)

- How they interact with the video

- What ratings they give, if any

- How they react at the end of the video

Telecom is one of the fields that exploits big data the most today; big data solutions for telecom are essential for:

- customer management (how to acquire and retain customers): behavioral analysis using data science, proactively identifying potential “churners” with a predictive model, targeted marketing (tools for customer segmentation and profiling that suggest areas of improvement), personalized plans

- operation management: call routing optimization (using IVR or direct routing to customer services, agent-specific routing depending on the end user for a better user experience, etc.), capturing all calls and events (CDR) in real time, capacity optimization by forecasting demand, marketing based on geolocation (data centers are deployed on a regional basis, allowing real-time analysis and response)

- network and infrastructure management: proactive maintenance, failure prediction through anomaly detection

- security management: detecting and preventing fraud, securing payments, protecting data

Adopting a data-driven approach increases customer interaction and improves customer satisfaction.

TECHNOLOGIES

With big data, we collect huge and complex data sets, and processing them requires specific tools and applications. Part of the complexity lies in the fact that the data volume is very large and mostly unstructured.

The first challenge is to find the best way to address data restructuring. The required big data software solutions should support this restructuring. The second challenge lies in data storage: choosing the best-suited databases to optimize storage for future analysis.

With ATSC’s vast real-world experience and enthusiastic experts, we can offer our customers a renovated platform built on trending big data software solutions:

- Apache Hadoop: Apache Hadoop is a reference among big data frameworks; it provides a solution where traditional data exploitation tools are very limited in tackling unstructured data. Hadoop is a free and open source framework used to store and process data. Thanks to its data replication, Hadoop provides high availability. Hadoop implements the MapReduce framework, which allows large-scale data processing by distributing the computation across several nodes. Hadoop is a very mature big data solution that has been used by many companies. Hadoop allows big data agile development.

- AWS (Amazon Web Services) is a cloud platform provided by Amazon; its storage services are used to store large amounts of data accessible online to other hosted applications. AWS has many advantages such as security, reliability, flexibility, and scalability. AWS is now very popular among companies, which puts skills in this technology in high demand.

- Cloudera offers centralized data storage that makes data analysis easier; the software needed is delivered in a single package, considerably reducing installation time.

- NoSQL database: since we are dealing with any type of data, the database should adapt to the absence of structure. The specificity of this kind of database is that no specific schema is required to store data in it. The most popular NoSQL databases are: Apache Cassandra, Oracle NoSQL, HBase, MongoDB, Amazon SimpleDB

- Tableau is an analytics tool that transforms data into insights.

- Talend Big Data Integration: a big data software solution that includes graphical tools and components that generate code, allowing a big data software development team to work with other big data software solutions and technologies such as Apache Hadoop and NoSQL databases

- etc…
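To illustrate the MapReduce model mentioned in the Hadoop entry above, here is a minimal single-process sketch of the classic word count. It does not use the Hadoop API; the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) are ours, and the loop over documents stands in for what a cluster would distribute across several nodes.

```python
from collections import defaultdict

def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]

def shuffle_phase(mapped_pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in mapped_pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(key, values):
    """Reduce: combine the values of one key into a single result."""
    return key, sum(values)

def word_count(documents):
    mapped = []
    for doc in documents:   # on a cluster, each split would be mapped on a different node
        mapped.extend(map_phase(doc))
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())

splits = ["big data is big", "data is valuable"]
counts = word_count(splits)
print(counts)
```

Because each map call only sees its own split and each reduce call only sees one key, both phases can run in parallel, which is what lets the computation spread over many machines.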

ARCHITECTURE OF BIG DATA

We have seen that there are several big data solutions; big data solutions for telecom are among them. It is hard to generalize the architecture of big data applications, or how the data itself is structured in them. A very simple example is given in the diagram below to give a general understanding of how different modules are connected and integrated with each other to do what big data is supposed to do: provide users with reports and analytics based on the huge mass of information that this kind of software receives from various sources.

ATSC architecture example

In this section, let’s take a simpler example of a big data application: a car rental service. When deciding to scale a car rental service, there are many challenges that we may face.

- Reliability of partners (car owners, other businesses)

- The success of the scaling attempt

- Control over users

- Competition

- Reliability of the renter

- Personalized offers for customers based on their habits, their budget etc.

By taking advantage of big data solutions, these challenges can be tackled intelligently with a real-time solution:

- Management of historical data regardless of its provenance

- Fast processing that allows real-time analysis and a real-time solution

- Solutions that can scale quickly and in a straightforward way

- A solution providing fast delivery and almost immediate input/output

- A solution able to handle huge amounts of data with good performance

- Solution focused on business

- Cost savings and even a better ROI

- IT resources leverage

- Enterprise-ready solution

- Complete production environment

- Rate management

- Loss prevention

- Customer satisfaction evaluation

- Accident management

The solution includes the following components and layers:

- Sources of big data: the tasks in this component consist of understanding where the data comes from. At this point, data scientists clarify their needs in order to identify the data required for their analysis. The structures and formats of the data needed may vary considerably from one analysis to another, and even within one axis of analysis there may be a wide variety of structures and formats. It is important to understand the speed at which data arrives and to evaluate its volume in order to predict the amount of data processed for each analysis. In this layer, we should also be able to understand the restrictions on the data that analysts may need.

- Data acquisition layer: at this point, the process consists of acquiring data from the previously identified data sources. An ETL-like transformation occurs here: data is extracted from its sources, transformed to fit the format needed for analysis, and then loaded into a Hadoop Distributed File System (HDFS) store or a traditional RDBMS. After this first processing, some further processing may also occur.

- Analysis layer: once the data is identified and processed, analysis can begin. Depending on the analysis that needs to be done, the data source may be accessed directly. At this point, support for decision making is developed: analytics are produced using the best-suited tools and algorithms.

- Consumption layer: in this layer, the analysis provided is consumed; it can be displayed in applications or fed into other business processes.
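The four layers above can be sketched end to end for the car rental example. This is only an illustration under stated assumptions: the two feeds, their field names and the functions `acquire`, `analyze` and `consume` are hypothetical, and a plain Python list stands in for the HDFS store or RDBMS where the data would really be loaded.

```python
from statistics import mean

# Sources layer: two hypothetical feeds with different formats.
booking_feed = [
    {"customer": "C1", "days": "3", "price_per_day": "40"},
    {"customer": "C2", "days": "1", "price_per_day": "55"},
]
partner_feed = ["C1;5;38", "C3;2;60"]   # customer;days;price_per_day

def acquire(bookings, partner_lines):
    """Acquisition layer: extract both feeds, transform to one schema, load into a list."""
    records = []
    for booking in bookings:
        records.append({"customer": booking["customer"],
                        "days": int(booking["days"]),
                        "price_per_day": float(booking["price_per_day"])})
    for line in partner_lines:
        customer, days, price = line.split(";")
        records.append({"customer": customer,
                        "days": int(days),
                        "price_per_day": float(price)})
    return records

def analyze(records):
    """Analysis layer: total spend and average rental length per customer."""
    by_customer = {}
    for record in records:
        by_customer.setdefault(record["customer"], []).append(record)
    return {
        customer: {
            "total_spend": sum(r["days"] * r["price_per_day"] for r in rentals),
            "avg_days": mean(r["days"] for r in rentals),
        }
        for customer, rentals in by_customer.items()
    }

def consume(analytics):
    """Consumption layer: render the analysis as report lines."""
    return [f"{customer}: spend={a['total_spend']:.2f}, avg_days={a['avg_days']:.1f}"
            for customer, a in sorted(analytics.items())]

report = consume(analyze(acquire(booking_feed, partner_feed)))
for line in report:
    print(line)
```

Keeping each layer as its own function mirrors the separation described above: a new source only touches `acquire`, and a new report only touches `consume`.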

COST TO BUILD A BIG DATA SOFTWARE SOLUTION

Big data applications are slightly different from traditional applications. They are data-driven, taking advantage of huge amounts of data and then working to extract the valuable information underneath. The development process is also quite different, but successful traditional and big data projects share at least one component: the methodology used for implementation. Agile methodology is more relevant than ever. Big data agile development is a must for a successful implementation.

A good strategy should be taken into consideration while building big data solutions. Not every strategy will work as we are addressing a very specific area. Big data strategy consulting can be very helpful for companies so that they can avoid wrong investments in solutions and infrastructure that do not really meet their needs.

In the process of building a big data application, the following approaches are used:

- Development of analytic strategies to identify the hidden advantages of large amounts of data for greater competitiveness

- Development and deployment of analytics tools to identify possible bottlenecks and performance or production issues

- Development and deployment of applications that support decision making

The work can involve many more actors than these approaches suggest, due to the inherent complexity of big data.

Big data consulting services are not as numerous as traditional software development consulting services. Developers with big data skills are currently scarce; not every developer has the necessary qualifications for a big data job. Many of them aren’t yet familiar with the complexities of deploying, managing and tuning the applications. Fortunately, the infrastructure is getting simpler, so that the focus can be on building the applications that are of interest to companies. Big data consulting offers these skills and allows companies to focus on their core business, so that they can benefit from big data without having to engage their own human resources in setting up a big data solution.

Big data applications can be built on top of existing platforms and systems. Building big data applications follows these steps:

- Design and infrastructure: designing the architecture of the big data application to be implemented. The design is based on several assumptions.

- Hardware and network configuration, and implementation: once the application to be built is designed, hardware and software configuration is done.

- System development, integration, training: the big data experts who are going to build the application are trained so that they better understand the company’s needs and goals and can implement the best solution. Application development starts, following the big data agile development methodology. Starting from an assumption, lines of code are written; any data can lead to new assumptions, which lead to new lines of code. It is really important to work in very small increments, developing one single assumption at a time: the assumption is analyzed and developed into actionable business information. Being agile is really important because learning from feedback is a key aspect and advantage of big data. Feedback helps evaluate whether the direction taken was the right one or whether we should adjust it during the following iteration.

- Test of the integrated system and launch in the production environment: the output developed from the assumption is tested, and if the test is positive it is pushed into the production environment.

- Improve infrastructure: based on the iterations made and the integration into the live system, we can work on improving the infrastructure to make it more reliable.

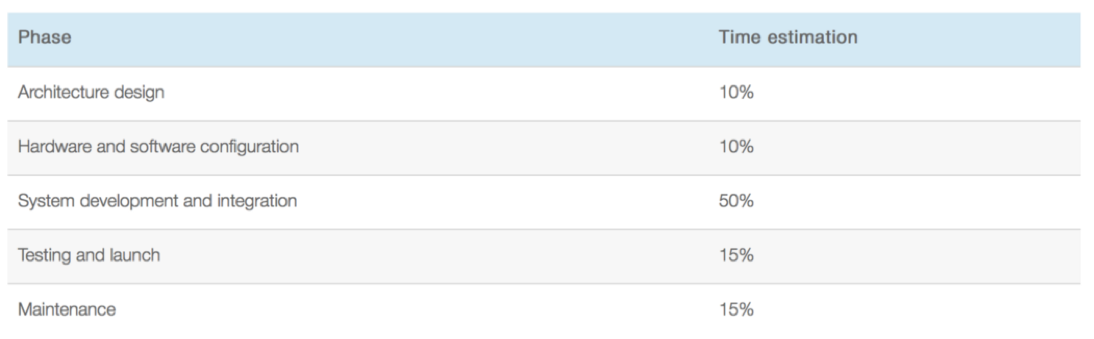

The following estimation doesn’t take into account the infrastructure and hardware needed to implement big data. We should also remember that as data evolve the costs may evolve as well.

In the following estimation, we consider the cost of building a big data application that pursues the following goals:

- Improvement of partners’ reliability: acting on data about car owners and business partners.

- The success of the scaling attempt: how to take advantage of data to increase the scope of the car rental business and address a wider customer base.

- Competition: analyze data to be more competitive, identify strengths and weaknesses.

- Renters’ reliability: define and measure reliability for safer operations. Identify the KPIs that define the reliability of renters, retrieve them from the data and analyze them to identify the causes of potential failures, etc.

- Personalized offers for customers based on their habits, their budget, etc.: understand how to tailor services that best meet the needs and budget of users.

Work proceeds in six-month iterations in order to improve on the above goals. Let’s refer to the first iteration as the big data car rental iteration. The following estimation covers the aforementioned goals within a six-month scope.

Since this is the first set-up, the delivery time for each phase and type of actions is as follows:

From these processes, we can identify the following actors involved in the process of building a big data application:

- Computer analysts

- Business analysts

- Developer engineers

- Software developers

- Database analysts

- Data administrators

- Mathematicians

- Statisticians

- Data analysts

- System administrators

- ETL developers

The big data software development team can be composed in several ways from the actors mentioned above. Developing the first iteration for the goals listed previously will require:

- 2 system administrators working full time for 6 months

- 8 developer engineers working full time for 6 months

- 4 database analysts and/or DBAs working full time for 6 months

- 4 data analysts working full time for 6 months

- 1 big data project manager working full time for 12 months

- 4 QA testers working full time for 4 months

This assumes a project duration of about one year for this phase.
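The staffing list above translates into a total effort that is easy to compute. The sketch below uses only the headcounts and durations given; the functions `person_months` and `estimate_cost` are ours, and since this article gives no salary figures, the monthly rates are left as a parameter to fill in with your own numbers.

```python
# (role, headcount, months) taken from the staffing list above
team = [
    ("system administrator", 2, 6),
    ("developer engineer", 8, 6),
    ("database analyst / DBA", 4, 6),
    ("data analyst", 4, 6),
    ("big data project manager", 1, 12),
    ("QA tester", 4, 4),
]

def person_months(staffing):
    """Total effort: headcount times duration, summed over all roles."""
    return sum(headcount * months for _, headcount, months in staffing)

def estimate_cost(staffing, monthly_rates):
    """Cost: headcount * months * monthly rate, summed over all roles."""
    return sum(headcount * months * monthly_rates[role]
               for role, headcount, months in staffing)

total_effort = person_months(team)
print(f"Total effort: {total_effort} person-months")

# Placeholder rates only: with a rate of 1 unit per person-month,
# the cost equals the effort. Replace with real rates for your market.
placeholder_rates = {role: 1 for role, _, _ in team}
print(f"Cost at placeholder rates: {estimate_cost(team, placeholder_rates)} units")
```

The first iteration therefore adds up to 136 person-months, with developer engineers accounting for 48 of them.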

If we continue the big data exploration with an additional iteration, we repeat the steps from system development to infrastructure improvement; there is no cost, or only a very low cost, for infrastructure design and hardware configuration once they are first set up.

There is no limit to building big data solutions; it is an ongoing process in which every output leads to another axis of improvement.

(ATSC BigData solutions team.)
